First of all, to successfully create an artificial human brain, we need to gather some facts about what the components of our brains are, how those parts tick, and how they interact with each other.

This will be partly biology, partly mathematics and partly electrical engineering, plus of course the programming (we will use Erlang!) needed to make the artificial brain learn on its own, which is called machine learning. Also, I would like to add some trivia to make this blog more interesting.

Did you know that the human brain runs on a meager 20 watts or so of power, which makes it very efficient compared to power-hungry supercomputers?

So that’s why when you are thinking hard, you get hungry easily!

In the 1940s, papers were written about the cells of the brain called neurons; there are tens of billions of these neurons (a common estimate is about 86 billion), all interconnected with each other. You can deduce that there will be trillions of connections between them. Each connection is called a synapse (or an edge, in graph terms), and together they form the neural network that we will mimic by building an Artificial Neural Network (ANN).

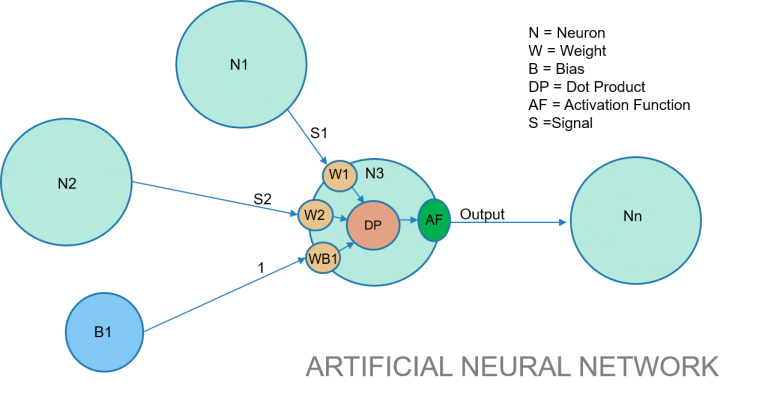

The neuron, a building block of the brain, is essentially a signal processor that accepts input signals Sn and one or more bias signals (usually static, with a value of 1) and then processes these signals according to the values of the weights Wn (synaptic weights) and WBn (bias weights). It then fires an output signal after passing the result through the activation function AF, sometimes called the transfer function in electrical engineering terminology; we will discuss this in detail moving forward.

Let’s concentrate on one particular artificial neuron called N3 in the diagram above to understand what is happening inside it.

Neuron N3 accepts signals S1 and S2 from N1 and N2 respectively, plus a static signal of value 1 from bias node B1. Each signal is then paired with its own weight: W1, W2, and WB1 for the bias. For now, just think of the bias as a special output-only neuron that emits a constant signal (usually 1). Each weight, including the bias weight, starts with a random value between -1 and 1; these values change dynamically during training via backpropagation until they settle at optimum values, at which point we can say that the neural network is in a learned state and ready for the work it is intended for.

The neuron calculates the dot product (DP) of these signals and weights and then feeds it to the activation function (AF), which here is simply the hyperbolic tangent (tanh) of the DP value. Many other mathematical functions can be used as the AF, such as step, sigmoid and ReLU; the choice depends on the type of problem the neural network is trying to solve, e.g. classification, prediction, etc.
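To make the activation functions named above a little more concrete, here is a minimal sketch of each one in Erlang. The module name `activation` and the function names are my own choices for illustration and are not part of the neuron code in this post; the sigmoid shown also assumes moderate inputs, since `math:exp/1` raises an error when its result overflows.

```erlang
-module(activation).
-export([step/1, sigmoid/1, relu/1, tanh/1]).

%% Step: fires 1 when the input is non-negative, otherwise 0.
step(X) when X >= 0 -> 1;
step(_) -> 0.

%% Sigmoid (logistic): squashes any input into the range (0, 1).
%% Assumes X is not a huge negative number (math:exp/1 would overflow).
sigmoid(X) -> 1 / (1 + math:exp(-X)).

%% ReLU: passes positive inputs through and clamps negatives to 0.
relu(X) -> max(X, 0).

%% tanh: squashes any input into the range (-1, 1); the AF used in this post.
tanh(X) -> math:tanh(X).
```

Step gives a hard yes/no output, while sigmoid and tanh give a smooth one, which is why they are commonly paired with gradient-based training such as backpropagation.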

Let us now begin the mathematics part of it, specifically the dot product.

Remember in your Physics class when your instructor would draw many arrows on the board and talk for hours about vectors having magnitude and direction? Well, if you were listening, good for you, as that applies here inside our artificial neuron. The difference is that instead of adding the vectors (which gives a resultant vector), we are interested in multiplying them to obtain the dot product, which is a scalar value.

DP = S1*W1 + S2*W2 + 1*WB1

Output = AF = tanh(DP)

Using Erlang, we can easily represent the signal and weight vectors as lists:

SList = [S1, S2, Sn]

or in our specific example SList = [S1, S2, 1]

WList = [W1,W2,Wn]

$ cat neuron.erl

-module(neuron).

-compile(export_all).

output(SIGNAL,WEIGHT)->

DP = dot_product(SIGNAL,WEIGHT,0),

Output = math:tanh(DP),

io:format("Signal Output: ~p~n",[Output]).

% tail recursion with pattern matching on the lists, where SH and WH are the list heads

dot_product([SH|SIGNAL],[WH|WEIGHT],ACC) ->

dot_product(SIGNAL,WEIGHT,(SH*WH) + ACC);

dot_product([],[],ACC) ->

io:format("Dot Product: ~p~n",[ACC]),

ACC.

$ erl

Erlang/OTP 21 [erts-10.3.5.7] [source] [64-bit] [smp:2:2] [ds:2:2:10] [async-threads:1] [hipe] [dtrace]

Eshell V10.3.5.7 (abort with ^G)

1> c(neuron).

neuron.erl:2: Warning: export_all flag enabled - all functions will be exported

{ok,neuron}

2> SList=[0.6,-0.5,1].

[0.6,-0.5,1]

3> WList=[0.8,0.5,0.3].

[0.8,0.5,0.3]

4> neuron:output(SList,WList).

Dot Product: 0.53

Signal Output: 0.4853810906053715
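As a side note, the same calculation can be written without the hand-rolled recursion and without printing, so that the result can be reused by other code. This is only a sketch; the module name `neuron_pure` is made up for this example, and it leans on the standard `lists:zipwith/3` and `lists:sum/1` functions.

```erlang
-module(neuron_pure).
-export([dot_product/2, output/2]).

%% Multiply the signals and weights pairwise, then add up the products.
%% lists:zipwith/3 fails if the two lists differ in length.
dot_product(Signals, Weights) ->
    lists:sum(lists:zipwith(fun(S, W) -> S * W end, Signals, Weights)).

%% Return the activated output instead of printing it.
output(Signals, Weights) ->
    math:tanh(dot_product(Signals, Weights)).
```

Calling `neuron_pure:output([0.6,-0.5,1], [0.8,0.5,0.3])` should give the same value as the shell session above, because 0.6*0.8 + (-0.5)*0.5 + 1*0.3 = 0.53.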

Let's do a second iteration of neuron.erl by generating a random weight list inside the module.

$ cat neuron2.erl

-module(neuron2).

-compile(export_all).

output(SIGNAL)->

WEIGHT = [rand:uniform(),rand:uniform(),rand:uniform()], % ugly, I know: what if we need, say, 100 random weights? We fix this in the next iteration

io:format("WList: ~p~n",[WEIGHT]),

DP = dot_product(SIGNAL,WEIGHT,0),

Output = [math:tanh(DP)],

io:format("Vector Signal Output: ~p~n",[Output]).

% tail recursion with pattern matching on the lists, where SH and WH are the list heads

dot_product([SH|SIGNAL],[WH|WEIGHT],ACC) ->

dot_product(SIGNAL,WEIGHT,(SH*WH) + ACC);

dot_product([],[],ACC) ->

io:format("Scalar Dot Product: ~p~n",[ACC]),

ACC.

Eshell V10.3.5.7 (abort with ^G)

1> c(neuron2).

neuron2.erl:2: Warning: export_all flag enabled - all functions will be exported

{ok,neuron2}

2> neuron2:output([0.6,-0.5,1]).

WList: [0.2337690191501245,0.6519301345368334,0.3585959964511436]

Scalar Dot Product: 0.08432242530410583

Vector Signal Output: [0.0841231402965209]

ok
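Notice that the weights printed above all fall between 0.0 and 1.0, because `rand:uniform/0` returns a float X with 0.0 =< X < 1.0, while earlier we said the initial weights should lie between -1 and 1. One possible way to get that range, for any number of weights, is sketched below. The module name `weights` and the function `random/1` are hypothetical helpers, not part of the neuron modules in this post.

```erlang
-module(weights).
-export([random/1]).

%% Build a list of N random weights.
%% rand:uniform/0 returns a float X with 0.0 =< X < 1.0,
%% so 2 * X - 1 falls in the range -1.0 =< W < 1.0.
random(N) when is_integer(N), N >= 0 ->
    [2 * rand:uniform() - 1 || _ <- lists:seq(1, N)].
```

For example, `weights:random(3)` returns a three-element list suitable for our neuron with two inputs and one bias.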

Let's further modify the neuron code using one of Erlang's most powerful features: process spawning. Erlang processes are lightweight and cheap to create, so we can easily spawn neurons as processes! One artificial neuron then corresponds to one Erlang process, and I can create 1 million processes for a network of 1 million neurons (awesome!). All of these processes are created and scheduled inside the Erlang VM, called BEAM, rather than as host OS processes or threads; this is what sets Erlang apart from most other programming languages.
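To get a feel for how cheap processes are, here is a small sketch that spawns any number of idle processes and stops them again. The module `many_neurons` and its functions are made up for this illustration. One caveat: the VM has a configurable process limit (262,144 by default on recent OTP releases, as far as I know), so reaching 1 million would mean starting the shell with a higher limit, for example `erl +P 2000000`. You can check the current value with `erlang:system_info(process_limit)`.

```erlang
-module(many_neurons).
-export([spawn_idle/1, stop/1]).

%% Spawn N processes that simply wait for a stop message.
%% Returns the list of their pids.
spawn_idle(N) ->
    [spawn(fun idle/0) || _ <- lists:seq(1, N)].

%% Ask every process in the list to finish.
stop(Pids) ->
    lists:foreach(fun(Pid) -> Pid ! stop end, Pids).

%% Body of each idle process: block until told to stop.
idle() ->
    receive
        stop -> ok
    end.
```

After `Pids = many_neurons:spawn_idle(100000).` you can run `erlang:system_info(process_count).` to see the count jump, then `many_neurons:stop(Pids).` to clean up.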

$ cat neuron3.erl

-module(neuron3).

-compile(export_all).

create()->

% generate three random floats, each with 0.0 =< X < 1.0, as the initial weights

WEIGHT = [rand:uniform() || _<- lists:seq(1,3)],

io:format("WList: ~p~n",[WEIGHT]),

Neuron_PID = spawn(?MODULE,output,[WEIGHT]),

io:format("Neuron PID: ~p~n",[Neuron_PID]).

output(WEIGHT)->

receive

{FROM,SIGNAL} ->

io:format("SList received: ~p~n",[SIGNAL]),

DP = dot_product(SIGNAL,WEIGHT,0),

Output = [math:tanh(DP)],

io:format("Output Signal: ~p~n",[Output]),

FROM ! {self(),[Output]},

output(WEIGHT);

terminate ->

ok

end.

% tail recursion with pattern matching on the lists, where SH and WH are the list heads

dot_product([SH|SIGNAL],[WH|WEIGHT],ACC) ->

dot_product(SIGNAL,WEIGHT,(SH*WH) + ACC);

dot_product([],[],ACC) ->

io:format("Scalar Dot Product: ~p~n",[ACC]),

ACC.

2> c(neuron3).

neuron3.erl:2: Warning: export_all flag enabled - all functions will be exported

{ok,neuron3}

3> neuron3:create().

WList: [0.3221219594447734,0.059638656379478294,0.9645371853464179]

Neuron PID: <0.86.0>

ok

4> i().

Pid Initial Call Heap Reds Msgs

Registered Current Function Stack

<0.0.0> otp_ring0:start/2 376 1585 0

init init:loop/1 2

<0.1.0> erts_code_purger:start/0 233 24 0

erts_code_purger erts_code_purger:wait_for_request 0

<0.2.0> erts_literal_area_collector:start 233 8 0

erts_literal_area_collector:msg_l 5

<0.3.0> erts_dirty_process_signal_handler 233 88 0

erts_dirty_process_signal_handler 2

— snip —

<0.77.0> erlang:apply/2 10958 17920 0

shell:shell_rep/4 17

<0.78.0> erlang:apply/2 987 75066 0

c:pinfo/1 50

<0.86.0> neuron3:output/1 233 5 0

neuron3:output/1 4

Total 39871 546002 0

312

ok

5> pid(0,86,0) ! {self(), [0.5,-0.6,1]}.

SList received: [0.5,-0.6,1]

{<0.78.0>,[0.5,-0.6,1]}

Scalar Dot Product: 1.0898149712411176

Output Signal: [0.7968106014837602]

6> pid(0,86,0) ! {self(), [0.5,-0.6,1]}.

SList received: [0.5,-0.6,1]

{<0.78.0>,[0.5,-0.6,1]}

Scalar Dot Product: 1.0898149712411176

Output Signal: [0.7968106014837602]

7> pid(0,86,0) ! {self(), [0.5,-0.6,1]}.

SList received: [0.5,-0.6,1]

{<0.78.0>,[0.5,-0.6,1]}

Scalar Dot Product: 1.0898149712411176

Output Signal: [0.7968106014837602]

8> pid(0,86,0) ! {self(), [0.9,-0.6,1]}.

SList received: [0.9,-0.6,1]

{<0.78.0>,[0.9,-0.6,1]}

Scalar Dot Product: 1.218663755019027

Output Signal: [0.8392595664433978]
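In the session above, the value echoed after each send is just the message we sent; the neuron's reply is delivered to the shell's mailbox, where it stays unread (the shell built-in `flush()` would print and remove it). A small client helper can send the signal and wait for the answer in one step. This is only a sketch: the module `neuron_client` and the function `call/3` are hypothetical, and it assumes the neuron replies with `{NeuronPid, Output}` as neuron3 does.

```erlang
-module(neuron_client).
-export([call/3]).

%% Send a signal list to a neuron process and wait for its reply.
%% Assumes the neuron answers with {NeuronPid, Output}.
%% Gives up after Timeout milliseconds if no reply arrives.
call(Pid, Signal, Timeout) ->
    Pid ! {self(), Signal},
    receive
        {Pid, Output} -> {ok, Output}
    after Timeout ->
        {error, timeout}
    end.
```

With neuron3 as written, `Output` arrives as a nested list such as `[[0.79...]]`, because the value is wrapped in a list once when it is computed and once more when it is sent.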

9> pid(0,86,0) ! {self(), terminate}.

SList received: terminate

{<0.78.0>,terminate}

10> =ERROR REPORT==== 29-Dec-2019::00:27:56.025871 ===

Error in process <0.86.0> with exit value:

{function_clause,[{neuron3,dot_product,

[terminate,

[0.3221219594447734,0.059638656379478294,

0.9645371853464179],

0],

[{file,"neuron3.erl"},{line,23}]},

{neuron3,output,1,[{file,"neuron3.erl"},{line,14}]}]}
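The error report above follows from the shape of the message we sent. `{self(), terminate}` is a two-element tuple, so it matches the `{FROM,SIGNAL}` clause with `SIGNAL` bound to the atom `terminate`; the bare `terminate` clause is never reached, and `dot_product/3` has no clause for an atom, hence the `function_clause` crash. Sending just `pid(0,86,0) ! terminate.` would have matched the second clause. One possible fix is to match the stop request before the general clause, as in the sketch below. The module name `neuron_loop` is hypothetical, and replying with a plain float is my own simplification.

```erlang
-module(neuron_loop).
-export([start/1, loop/1]).

%% Spawn a neuron process that keeps the given weights as its state.
start(Weights) ->
    spawn(?MODULE, loop, [Weights]).

loop(Weights) ->
    receive
        %% Match the stop request first so it is never mistaken for a signal.
        {_From, terminate} ->
            ok;
        terminate ->
            ok;
        %% Only lists are treated as signal vectors.
        {From, Signal} when is_list(Signal) ->
            DP = lists:sum(lists:zipwith(fun(S, W) -> S * W end, Signal, Weights)),
            From ! {self(), math:tanh(DP)},
            loop(Weights)
    end.
```

With this ordering, both `Pid ! terminate` and `Pid ! {self(), terminate}` end the process normally instead of crashing it.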

10> i().

Pid Initial Call Heap Reds Msgs

Registered Current Function Stack

<0.0.0> otp_ring0:start/2 376 1597 0

init init:loop/1 2

<0.1.0> erts_code_purger:start/0 233 25 0

erts_code_purger erts_code_purger:wait_for_request 0

<0.2.0> erts_literal_area_collector:start 233 9 0

erts_literal_area_collector:msg_l 5

<0.3.0> erts_dirty_process_signal_handler 233 89 0

erts_dirty_process_signal_handler 2

<0.4.0> erts_dirty_process_signal_handler 233 9 0

erts_dirty_process_signal_handler 2

<0.5.0> erts_dirty_process_signal_handler 233 9 0

erts_dirty_process_signal_handler 2

<0.6.0> prim_file:start/0 233 8 0

prim_file:helper_loop/0 1

<0.9.0> erlang:apply/2 6772 225856 0

erl_prim_loader erl_prim_loader:loop/3 5

<0.41.0> logger_server:init/1 610 518 0

logger gen_server:loop/7 10

<0.43.0> erlang:apply/2 1598 739 0

application_controlle gen_server:loop/7 7

<0.45.0> application_master:init/4 376 47 0

application_master:main_loop/2 7

<0.46.0> application_master:start_it/4 376 398 0

application_master:loop_it/4 5

<0.48.0> supervisor:kernel/1 610 2647 0

kernel_sup gen_server:loop/7 10

<0.49.0> erlang:apply/2 6772 129449 0

code_server code_server:loop/1 3

<0.51.0> rpc:init/1 233 38 0

rex gen_server:loop/7 10

<0.52.0> global:init/1 233 81 0

global_name_server gen_server:loop/7 10

<0.53.0> erlang:apply/2 233 39 0

global:loop_the_locker/1 5

<0.54.0> erlang:apply/2 233 9 0

global:loop_the_registrar/0 2

<0.55.0> inet_db:init/1 233 322 0

inet_db gen_server:loop/7 10

<0.56.0> global_group:init/1 233 93 0

global_group gen_server:loop/7 10

<0.57.0> file_server:init/1 376 305 0

file_server_2 gen_server:loop/7 10

<0.58.0> gen_event:init_it/6 233 52 0

erl_signal_server gen_event:fetch_msg/6 10

<0.59.0> supervisor_bridge:standard_error/ 233 69 0

standard_error_sup gen_server:loop/7 10

<0.60.0> erlang:apply/2 233 18 0

standard_error standard_error:server_loop/1 2

<0.61.0> supervisor_bridge:user_sup/1 233 87 0

gen_server:loop/7 10

<0.62.0> user_drv:server/2 2586 29565 0

user_drv user_drv:server_loop/6 9

<0.63.0> group:server/3 233 120 0

user group:server_loop/3 4

<0.64.0> group:server/3 2586 130888 0

group:server_loop/3 4

<0.65.0> kernel_config:init/1 233 53 0

gen_server:loop/7 10

<0.66.0> kernel_refc:init/1 233 50 0

kernel_refc gen_server:loop/7 10

<0.67.0> supervisor:kernel/1 233 97 0

kernel_safe_sup gen_server:loop/7 10

<0.68.0> supervisor:logger_sup/1 376 424 0

logger_sup gen_server:loop/7 10

<0.69.0> logger_handler_watcher:init/1 233 57 0

logger_handler_watche gen_server:loop/7 10

<0.70.0> logger_olp:init/1 987 3391 0

logger_proxy gen_server:loop/7 10

<0.72.0> logger_olp:init/1 376 322 0

logger_std_h_default gen_server:loop/7 10

<0.73.0> erlang:apply/2 233 43 0

logger_std_h:file_ctrl_loop/1 4

<0.77.0> erlang:apply/2 4185 20882 0

shell:shell_rep/4 17

<0.78.0> erlang:apply/2 2586 122830 4

c:pinfo/1 50

Total 36674 671235 4

308

ok

To be continued... but you may check blog.erlangstar.com for the completed blog.